At Indeed, we have an unrelenting obsession with speed. We believe site performance is critical to providing the best experience for job seekers. Since Indeed launched in 2004, traffic growth has been swift and steady. Today, we have over 85 million unique visitors and over 2 billion searches per month. Traffic to Indeed continues to rapidly increase, so ensuring our systems scale with this traffic really matters. In order for our site to be fast and reliable, we developed Boxcar, an RPC framework and load distribution strategy for interacting with remote services using a generic layer to transport raw bytes.

Considerations

Before we developed Boxcar in 2010, Indeed’s main search site was powered by a single web application. In 2009, we split this application into two separate but highly coupled server processes to address some bottlenecks, but this was only a stop-gap solution. The two processes were always deployed on the same machine to simplify configuration and minimize potential protocol compatibility issues. This solution unfortunately prevented us from maximizing hardware utilization, increased vulnerability to certain kinds of failures, and required a carefully managed deploy process during our weekly releases.

We decided that we needed to implement a more general service-oriented architecture to improve system scalability. We considered various architectures and network topologies that were in common use. Some required expensive, custom hardware or larger numbers of commodity machines. Others introduced latency or additional points of failure. Dissatisfied with readily available options, we decided to develop something new.

The resulting solution includes an elegant algorithm that reliably routes requests from front-end applications to back-end services without a single point of failure or intermediate hops. This layer is itself protocol-agnostic, but we standardized on Google Protocol Buffers to provide structured remote service invocation with excellent forward and backward compatibility. When a service instance becomes unavailable, the core algorithm adapts nearly instantaneously and routes requests only to the remaining available instances, so there is no disruption to our site. It also transparently diverts traffic towards servers with faster response times.

Implementation

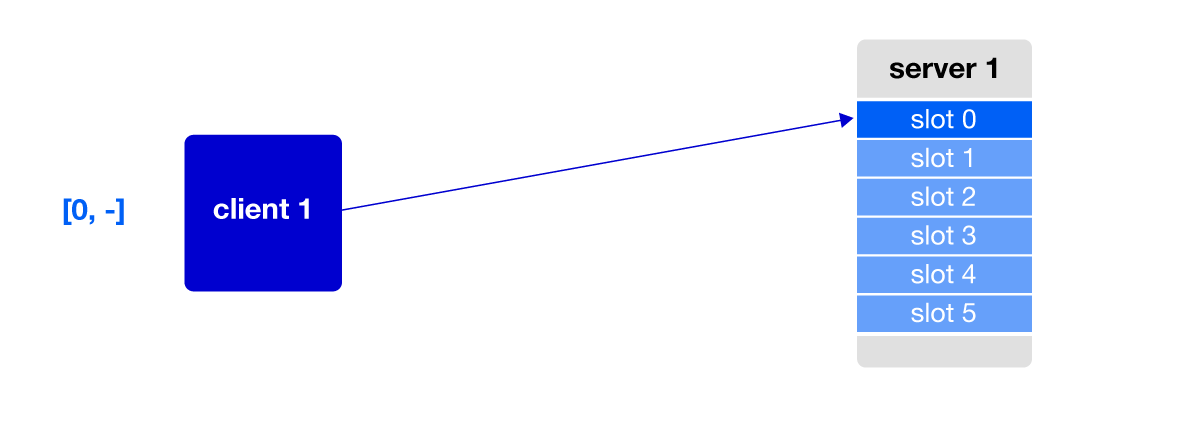

The basic Boxcar algorithm is simple. Each server maintains an ordered list of connection slots. Each client sends a given request to a server based on the lowest slot number available across the whole pool. This provides a lightweight mechanism for clients to balance requests without the need to directly measure server load.

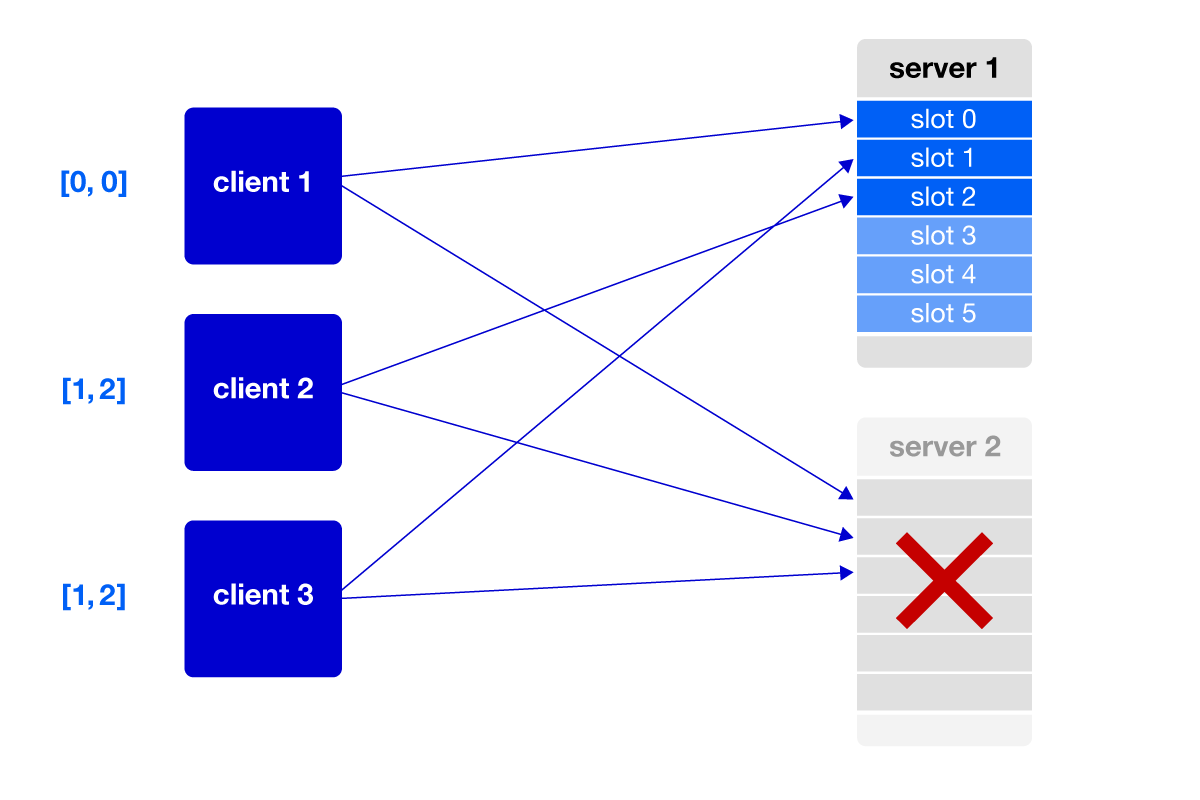

Figure 1: each client’s pool contains the lowest server slot number available

Servers give out their lowest available slot when a connection is established. The same slot number is never simultaneously associated with multiple active connections. The slot associated with a connection does not affect how the server treats requests on that connection; it is only on the client that this affects request distribution.
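The server-side bookkeeping described above can be sketched in a few lines. This is a minimal illustration, not Boxcar's actual code: the `SlotAllocator` class, its method names, and the choice to number slots from 0 are all assumptions made for the example.

```python
import heapq


class SlotAllocator:
    """Hypothetical server-side helper: hands out the lowest free slot number."""

    def __init__(self):
        self._free = []        # min-heap of reclaimed slot numbers
        self._next_unused = 0  # smallest slot number never handed out (assumed to start at 0)
        self._active = set()   # slots currently tied to a live connection

    def acquire(self):
        # Every reclaimed slot was handed out earlier, so it is always
        # lower than any never-used slot number.
        if self._free:
            slot = heapq.heappop(self._free)
        else:
            slot = self._next_unused
            self._next_unused += 1
        self._active.add(slot)
        return slot

    def release(self, slot):
        # Called when a connection closes; the slot becomes available again.
        if slot not in self._active:
            raise ValueError(f"slot {slot} is not active")
        self._active.remove(slot)
        heapq.heappush(self._free, slot)


server = SlotAllocator()
first_three = [server.acquire() for _ in range(3)]  # three connections arrive
server.release(0)                                   # the holder of slot 0 goes away
reclaimed = server.acquire()                        # the next connection reuses slot 0
```

Tracking active slots in a set is one way to keep a slot number from being associated with two live connections at once.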

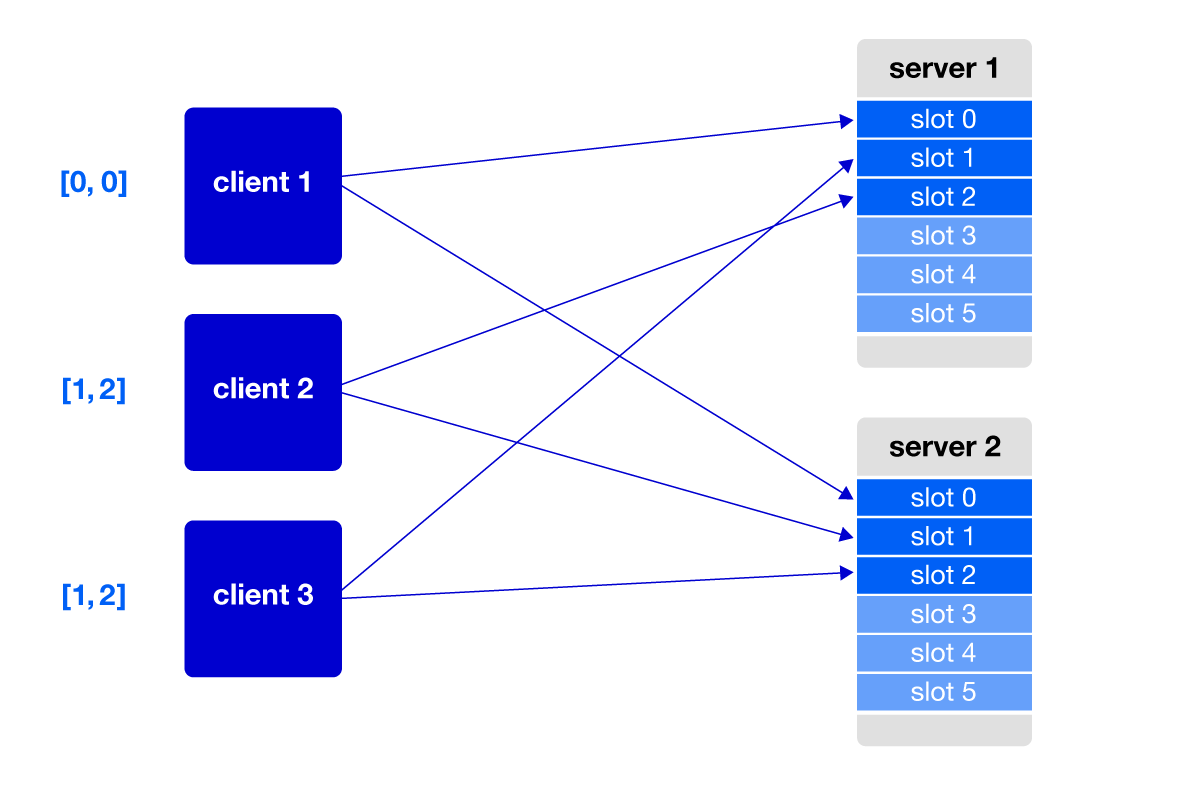

Each Boxcar client maintains a persistent, fixed-size connection pool that it constantly adjusts to maintain balance. When making a request, a client uses the connection from its pool with the lowest slot number. In the background, the client optimizes this connection pool. It establishes new connections to available servers even while servicing requests using already established connections. If a new connection has a lower slot number than any connection already in the pool, the client kicks out the existing connection and replaces it with the new one. Otherwise, it discards the new connection and sleeps briefly before trying again. The pool is managed by a specialized data structure that handles the non-I/O operations in a matter of microseconds.
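A rough sketch of the pool's replacement rule follows. It assumes one reading of the description: a new connection is kept when the pool has free space or when its slot number is lower than the highest slot number currently in the pool. The `Connection` and `ConnectionPool` names are hypothetical, and a plain sorted list stands in for the specialized data structure mentioned above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    server: str  # illustrative server name
    slot: int    # slot number the server assigned to this connection


class ConnectionPool:
    """Hypothetical fixed-size pool that keeps the lowest-slot connections."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.connections = []  # kept sorted by slot number, best first

    def offer(self, candidate):
        """Offer a freshly opened connection; return the one to close, if any."""
        if len(self.connections) < self.capacity:
            self._insert(candidate)
            return None
        worst = self.connections[-1]
        if candidate.slot < worst.slot:
            # The newcomer beats the worst pooled connection: swap them.
            self.connections.pop()
            self._insert(candidate)
            return worst
        # Not an improvement: the caller discards it and sleeps before retrying.
        return candidate

    def _insert(self, conn):
        self.connections.append(conn)
        self.connections.sort(key=lambda c: c.slot)


pool = ConnectionPool(capacity=2)
pool.offer(Connection("a", 3))
pool.offer(Connection("b", 1))
evicted = pool.offer(Connection("a", 0))   # better than slot 3, so slot 3 is evicted
rejected = pool.offer(Connection("b", 5))  # worse than everything pooled
```

In a real client the background threads would call something like `offer` in a loop, closing whatever connection it returns.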

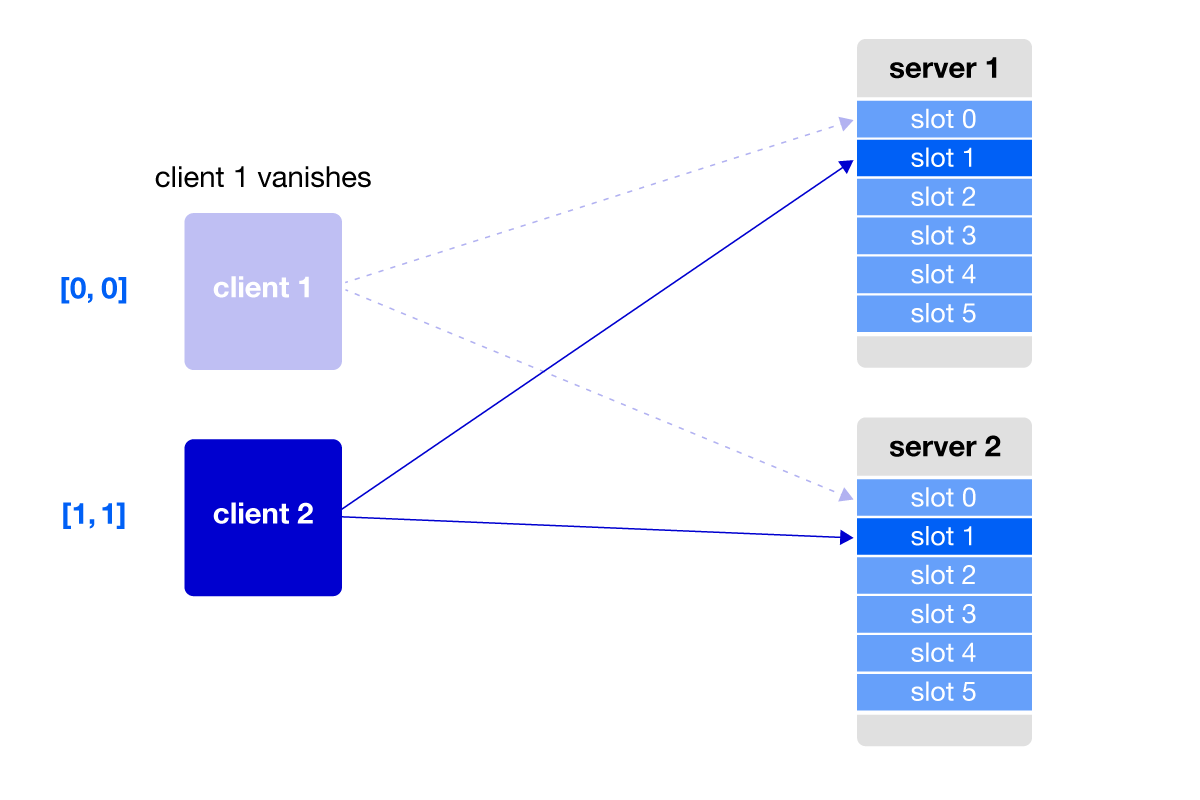

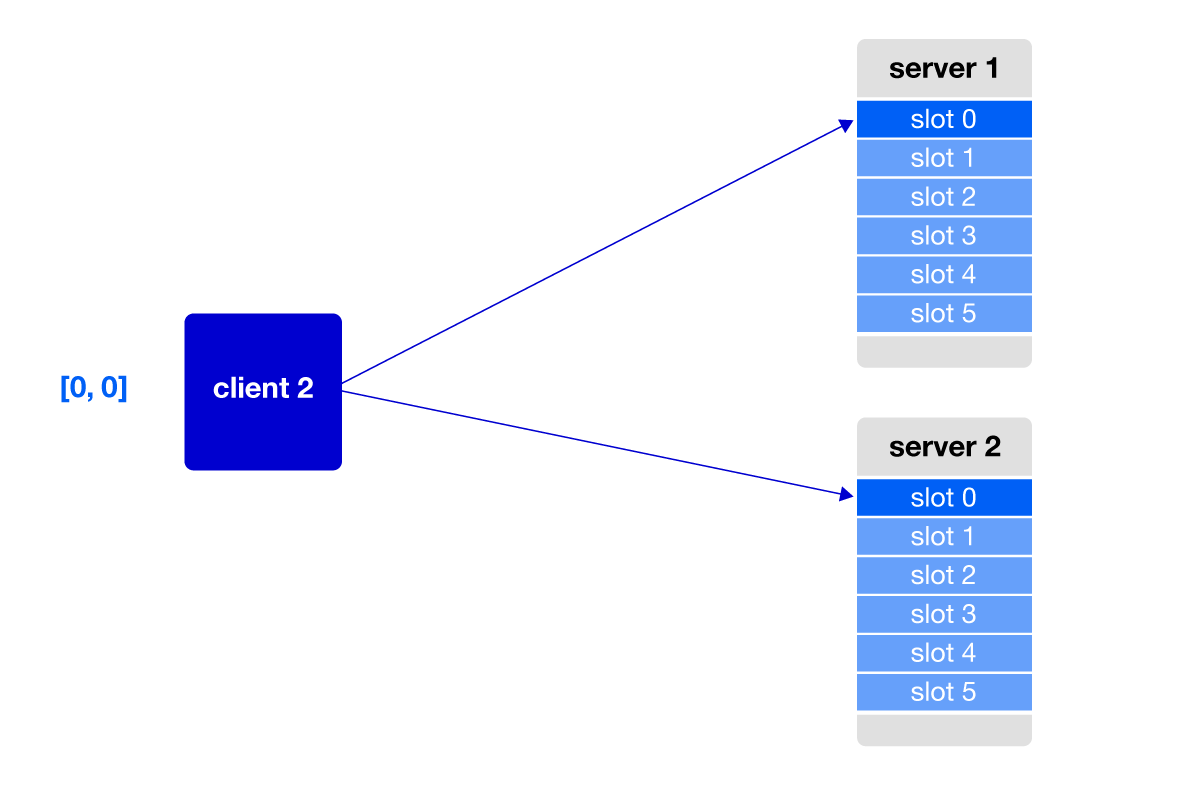

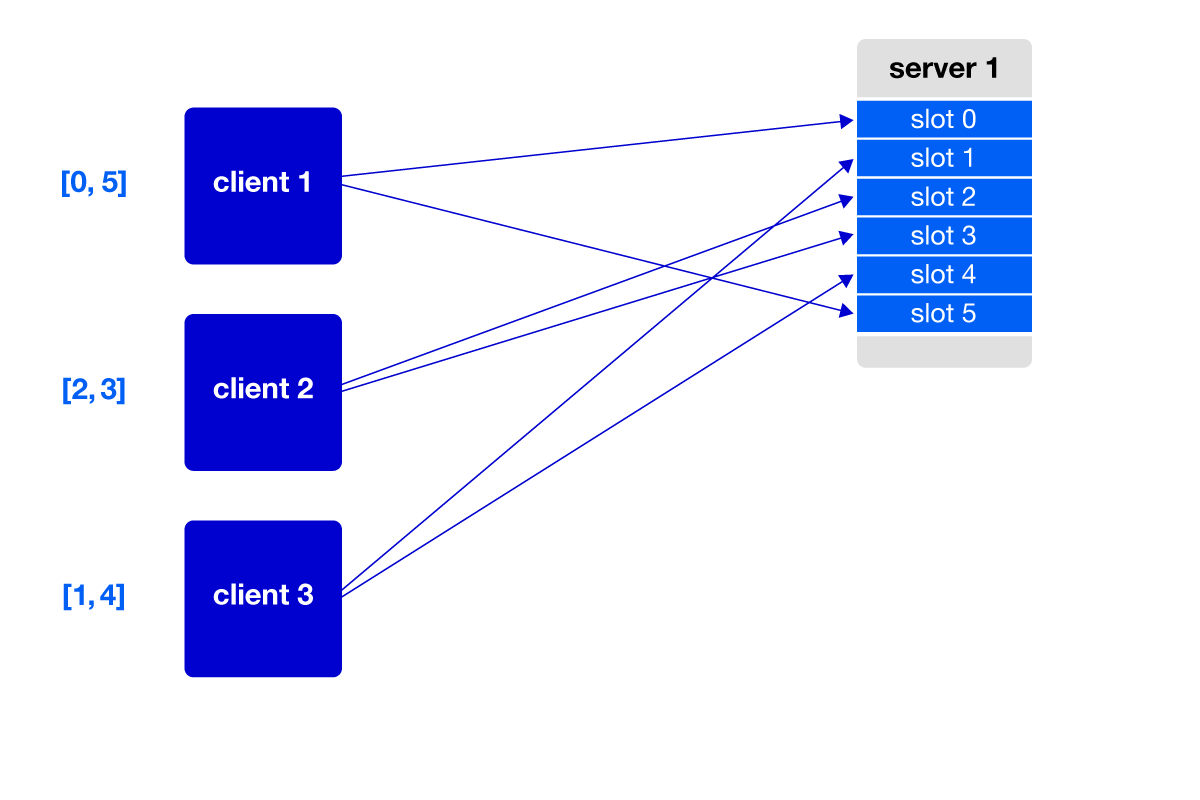

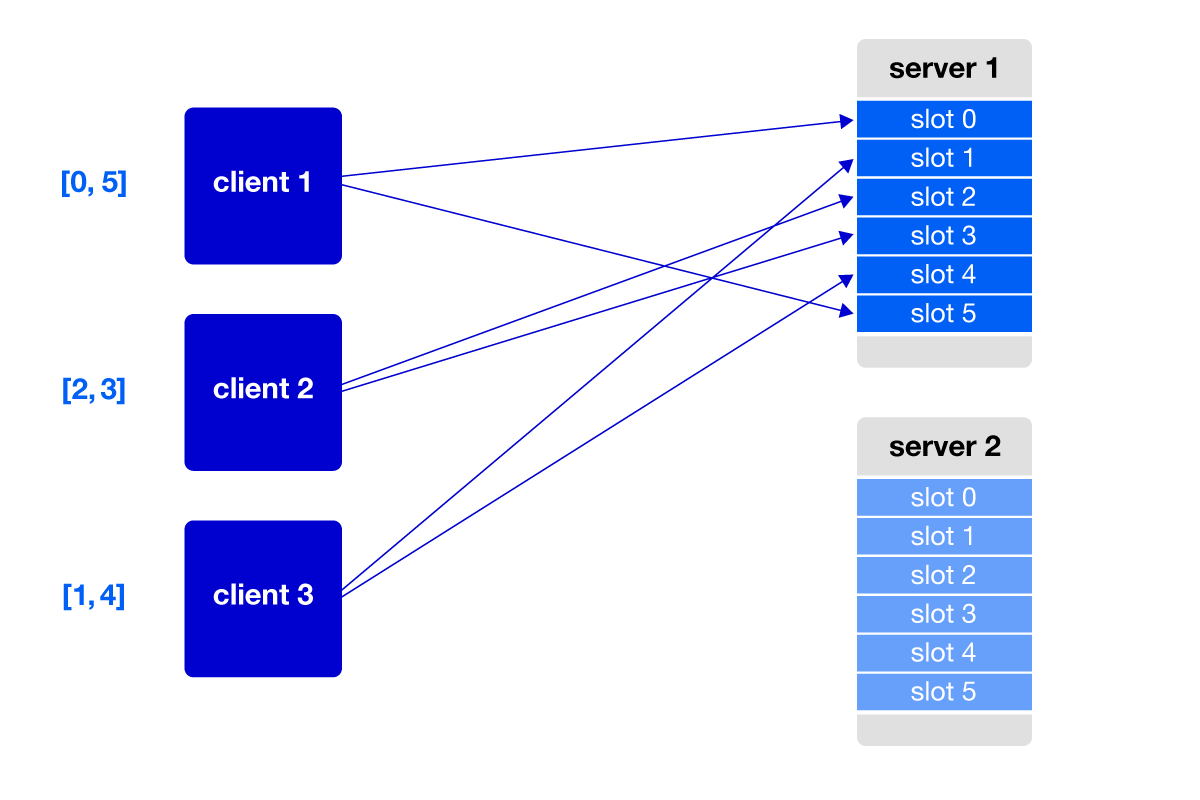

When a client goes away, the server reclaims the slot numbers associated with its connections. If these are low slot numbers, they are quickly allocated to the remaining clients. These clients then discard the connections they had associated with higher slot numbers in order to make room in their pools (see Figures 2-3).

Figure 2: client with a low slot disappears

Figure 3: low slots are reclaimed and allocated

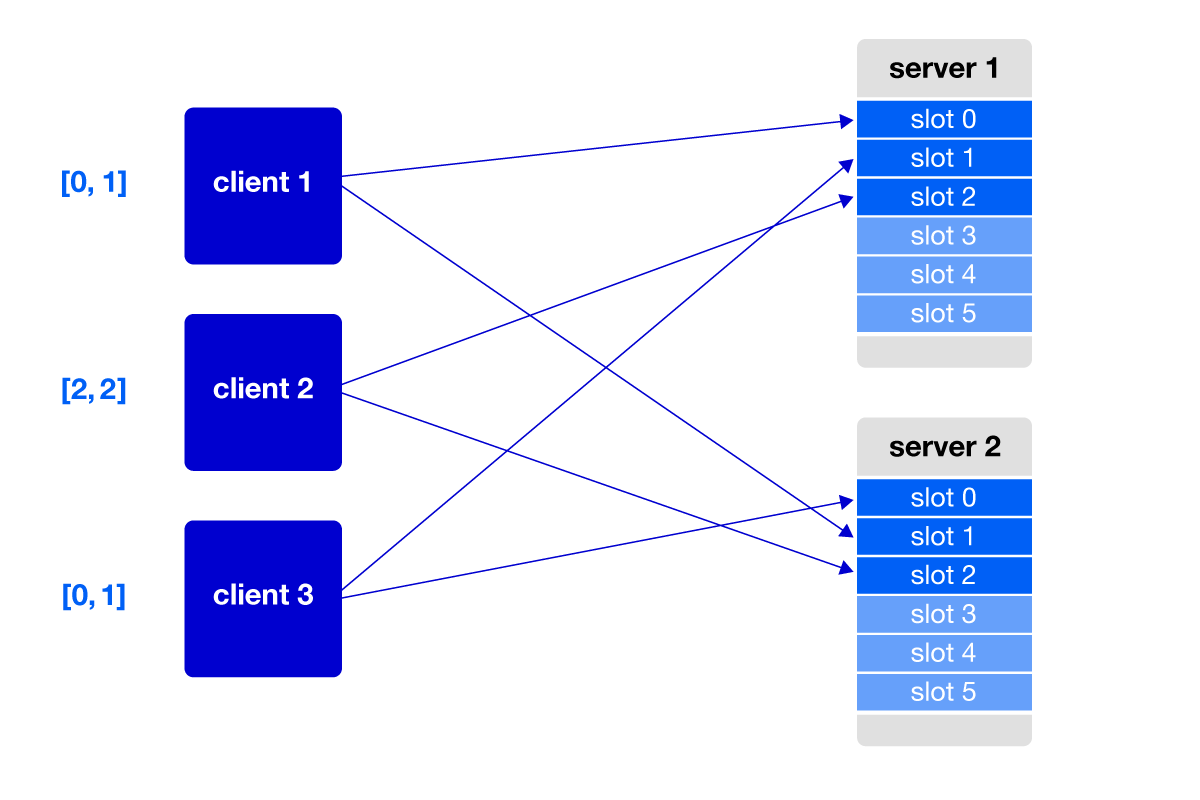

The net effect is that each client has pre-computed which connection will be used for the Nth concurrent request. Our clients are typically web applications that service simultaneous requests themselves. If a client only services one request at a time, every request will use the same connection — the one in the pool that has the lowest slot number. All other connections will sit idle. If the client services two concurrent requests, each request will use one of the two connections in the pool with the lowest slot numbers. The other connections will remain idle. This generalizes to as many concurrent requests as there are connections in the pool.

If there are more concurrent requests than there are connections, additional requests are rejected. We reject requests in this extreme condition in order to fail fast and prevent excessive load on the services. We size our connection pools based on expected peak traffic levels, so this rejection should only happen during extraordinary conditions.
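To make the selection rule concrete, here is a small sketch of how a client might hand out connections to concurrent requests. The `RequestRouter` and `PoolExhausted` names are invented for this example, and connections are modeled as simple `(slot, server)` tuples rather than real sockets.

```python
import bisect


class PoolExhausted(Exception):
    """Raised when every pooled connection is already serving a request."""


class RequestRouter:
    """Hypothetical client-side selector: always picks the lowest idle slot."""

    def __init__(self, connections):
        self._idle = sorted(connections)  # (slot, server) tuples, lowest slot first
        self._busy = set()

    def checkout(self):
        if not self._idle:
            # More concurrent requests than connections: fail fast.
            raise PoolExhausted("all connections are busy")
        conn = self._idle.pop(0)
        self._busy.add(conn)
        return conn

    def checkin(self, conn):
        # The request finished; the connection becomes selectable again.
        self._busy.remove(conn)
        bisect.insort(self._idle, conn)


router = RequestRouter([(2, "b"), (0, "a"), (0, "b")])
first = router.checkout()    # lowest slot in the pool
second = router.checkout()   # a second concurrent request gets the next lowest
router.checkin(first)        # the first request completes
third = router.checkout()    # the freed low slot is preferred again
fourth = router.checkout()   # only the highest slot is left
try:
    router.checkout()        # a fourth concurrent request exceeds the pool size
    was_rejected = False
except PoolExhausted:
    was_rejected = True
```

With a single request in flight at a time, `checkout` returns the same connection every time, which matches the behavior described above.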

If a remote service fails to serve a request, the client disposes of the associated connection and retries the request on the next best connection. The number of retries that can happen is bounded in configuration. Disposing of the connection frees up an additional space in the pool for the greedy connection acquisition threads to fill, which quickly restores the pool to capacity.

Given the random distribution of connections, the connection selected for retry could be to the same failing server. Failures due to unavailable servers tend to be fast, usually sub-millisecond in our networks, so the client will incrementally purge its pool of these connections. This continues until the client finds a connection to a live server, reaches its retry limit, or purges all connections in its pool. The latter two conditions both result in the request failing outright.
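The retry behavior can be sketched as a short loop. This is a simplified illustration under stated assumptions: `call_with_retries`, the `send` callback, and the `max_retries` parameter are hypothetical names, failures are signaled with Python's built-in `ConnectionError`, and the background threads that refill the pool are left out.

```python
def call_with_retries(pool, send, max_retries):
    """Try the best connection; on failure, dispose of it and try the next.

    pool is a list of connections ordered best first, and send(conn)
    returns a response or raises ConnectionError.
    """
    failures = 0
    while pool and failures <= max_retries:
        conn = pool[0]
        try:
            return send(conn)
        except ConnectionError:
            pool.pop(0)  # dispose of the failed connection
            failures += 1
    raise RuntimeError("request failed: retry limit reached or pool empty")


def send(conn):
    # Stand-in for a real RPC: connections to the "dead" server always fail.
    slot, server = conn
    if server == "dead":
        raise ConnectionError(f"{server} is unavailable")
    return f"ok from {server}"


pool = [(0, "dead"), (1, "dead"), (1, "live"), (2, "live")]
response = call_with_retries(pool, send, max_retries=2)

all_dead = [(0, "dead"), (1, "dead")]
try:
    call_with_retries(all_dead, send, max_retries=5)
    failed_outright = False
except RuntimeError:
    failed_outright = True
```

In the first call, two connections to the failed server are purged before the request succeeds on the third attempt. In the second call, the pool empties before the retry limit is reached, so the request fails outright.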

Figure 4: three clients seeking the best slots on two servers

Figure 5: server loss

Figure 6: following server loss, clients continue to find the best slots

Figure 7: a new server is added

Figure 8: clients connect to the best slots available

If any single server is slow, connections to that server are more likely to be busy. Additional requests from that client will need to be serviced using different connections. The client preference for lower slot numbers means that those are likely to be connected to different servers, thus avoiding the bottleneck. The overall effect is that slower servers will service fewer requests, meaning more load is distributed to the remaining servers. Servers that are running at half speed will receive half as many requests, on average.

Insights

Load balancing implies a strictly equal distribution of load across all instances. That degree of consistency is a difficult problem to solve, and we do not claim to have solved it. The key realization we had when designing Boxcar was that we could achieve our goals by solving a much simpler problem.

Boxcar balances requests using an elegant strategy for allocating persistent connections to incoming requests. Our approach does not guarantee a uniform distribution of requests or load. Instead, we try to avoid sending too many requests to a single server. This is a considerably simpler goal to achieve and was based on two insights.

The first insight was that we don’t need to directly balance individual requests. Instead, we balance connections probabilistically and impose a stable connection selection heuristic. This indirectly results in good balance for individual requests. The balance is not perfect in every instance at every moment, but in aggregate, the results are well-balanced, and the outliers are brief and rare. In our use cases, the number of requests is a reasonable proxy for server load, so a relatively uniform distribution of requests results in a relatively uniform distribution of load.

The second insight was that we do not need to have uniform distribution of load across instances. Suppose there are 10 servers and 3 servers’ worth of load. The implied goal with load balancing is to have each server at 30% utilization. However, for our goals, it’s acceptable if at some moment we have 3 servers at 100% utilization and 7 servers at 0% utilization, or 5 servers at 60% utilization and 5 servers at 0%. Our goal is to stably and efficiently serve our site traffic. As long as no server exceeds its capacity to serve requests stably and in a timely fashion, we really don’t care how uniform the load is.
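The arithmetic in this example is easy to check. The snippet below is only an illustration: the helper names are made up, and the 100% limit is an assumed stand-in for "capacity to serve requests stably".

```python
def total_load(utilization_percent):
    # Sum of per-server utilization, in percent of one server's capacity.
    return sum(utilization_percent)


def within_capacity(utilization_percent, limit=100):
    # Acceptable as long as no single server exceeds the (assumed) limit.
    return all(u <= limit for u in utilization_percent)


scenarios = {
    "uniform": [30] * 10,
    "three_full": [100] * 3 + [0] * 7,
    "five_at_sixty": [60] * 5 + [0] * 5,
    "overloaded": [150] * 2 + [0] * 8,
}

for name, utilization in scenarios.items():
    print(name, total_load(utilization), within_capacity(utilization))
```

All four distributions carry the same 300% total, equal to three servers' worth of load. Only the last one, added here as a counterexample, pushes a server past its limit.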

Results

We’ve now been using Boxcar for nearly three years. We use it in production, testing, and development environments. Today, well over a dozen different services across nearly all of our products use this framework, carrying many hundreds of millions of requests and many gigabytes of data each day.

With Boxcar, Indeed is able to scale well with low-cost commodity hardware while maintaining high availability. Our networks are set up with a simple topology without any added latency or network hops. Failures of individual systems have almost no impact on our ability to serve our users. Our operational costs are lower because our systems are less fragile.

Over the years, Boxcar has delivered all of the benefits we hoped for and has proven durable in numerous different applications. It has proven versatile, bringing valuable scaling capabilities to a broad range of products that collectively generate many hundreds of millions of requests per day. The core of Boxcar combines a few simple concepts into a sophisticated, resilient system for load distribution. We will cover other interesting aspects of Boxcar in future posts.